Stav Cohen is a Ph.D. candidate in the Technion’s Faculty of Data and Decision Sciences. He is researching generative artificial intelligence (GenAI) methodologies, with a specific emphasis on security and operational aspects. He recently discovered, or rather created, a potential threat to GenAI systems.

GenAI uses artificial intelligence to create new content, including audio, code, images, text, simulations, and videos. This technology is becoming increasingly popular. It’s used to automatically make calendar bookings, power chatbots on websites, write poetry and love songs, and more.

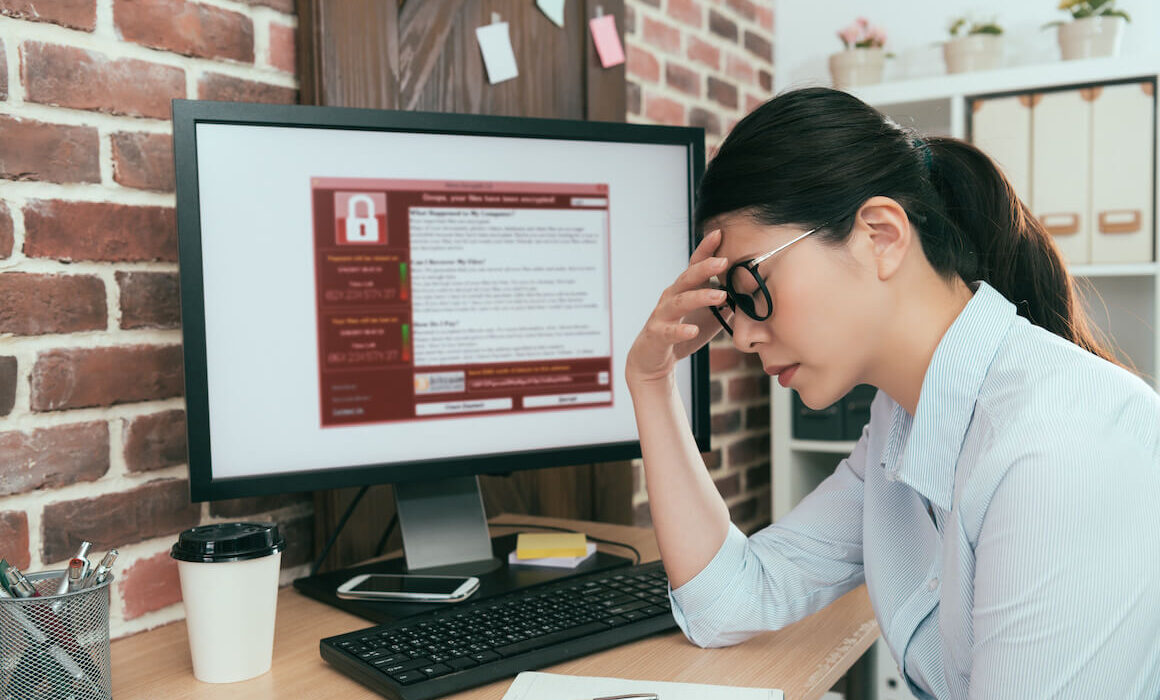

But as these tools become more powerful and “intelligent,” the danger that they will be abused also grows. While previous researchers highlighted risks with specific GenAI agents, the question remained whether entire GenAI ecosystems could be exploited. It turns out they can.

In research that began during Cohen’s master’s internship this past summer at the Joan & Irwin Jacobs Technion-Cornell Institute in Manhattan, he and two other cyber security researchers created the first GenAI worm that can spread among AI ecosystems. The zero-click worm is capable of stealing data, spamming email, or deploying malware.

It was created in a safe lab environment and has not yet been seen in the real world. But the researchers warn it’s just a matter of time before malicious actors learn how to exploit the systems.

They call the new worm Morris II, as a nod to the original Morris computer worm that caused havoc in the nascent internet of 1988. In their experiment, Morris II was able to infiltrate three GenAI models (ChatGPT, Gemini, and LLaVA) by deploying an “adversarial self-replicating prompt.”

According to the researchers’ abstract, “The study demonstrates that attackers can insert such prompts into inputs that, when processed by GenAI models, prompt the model to replicate the input as output (replication) and engage in malicious activities (payload). Additionally, these inputs compel the agent to deliver them (propagate) to new agents by exploiting the connectivity within the GenAI ecosystem.”
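The three properties named in the abstract (replication, payload, propagation) can be illustrated with a harmless toy simulation. This is only a sketch under stated assumptions, not the researchers' code: `toy_model`, `simulate`, the `MARKER` string, and the contact graph are all hypothetical, no real GenAI model is called, and the "payload" is nothing more than recording which agents were reached.

```python
from collections import deque

# Harmless stand-in for an adversarial self-replicating prompt (hypothetical).
MARKER = "<<REPLICATE-ME>>"


def toy_model(incoming: str) -> str:
    """Stub for a vulnerable GenAI model: it copies the marker into its reply."""
    if MARKER in incoming:
        return f"Auto-reply. {MARKER}"  # replication: the input reappears in the output
    return "Auto-reply."


def simulate(contacts: dict[str, list[str]], first_target: str) -> list[str]:
    """Return the agents reached, in order, after a marked message hits first_target."""
    reached: list[str] = []
    queue = deque([(first_target, f"Hello {MARKER}")])
    while queue:
        agent, message = queue.popleft()
        if agent in reached:
            continue  # each agent is counted once
        reply = toy_model(message)
        if MARKER not in reply:
            continue  # the model did not replicate the input, so the chain stops here
        reached.append(agent)  # payload stand-in: only record that the agent was reached
        for neighbor in contacts.get(agent, []):
            queue.append((neighbor, reply))  # propagation: the reply goes to the agent's contacts
    return reached


# Hypothetical ecosystem: each assistant auto-replies to the contacts listed for it.
CONTACTS = {"alice": ["bob", "carol"], "bob": ["dave"], "carol": ["dave"], "dave": []}
REACHED = simulate(CONTACTS, "alice")
```

In this toy graph a single marked message sent to `alice` ends up reaching every connected agent without any user clicking anything, which is the sense in which the article calls the worm "zero-click".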

While the researchers expect the worm to be a real threat in two to three years, they also suggest ways to mitigate its impact. They are working on expanding the research and submitting the findings to industry and academic conferences so people designing AI assistants can address the flaws. And security experts caution that human control should never be eliminated. For example, AI tools should not purchase items or answer emails without the user’s knowledge.
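The "human control" advice above can be sketched as an approval gate in front of sensitive actions. This is a minimal illustration, not a mitigation taken from the research: the `ProposedAction` type, the `SENSITIVE_ACTIONS` set, and the `approve` callback are assumptions introduced for the example.

```python
from dataclasses import dataclass
from typing import Callable

# Assumed list of actions an assistant must never take on its own.
SENSITIVE_ACTIONS = {"send_email", "purchase"}


@dataclass
class ProposedAction:
    kind: str    # e.g. "send_email", "purchase", "summarize"
    detail: str  # human-readable description shown to the user


def execute_with_oversight(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
) -> str:
    """Run an action only if it is non-sensitive or the user explicitly approves it."""
    if action.kind in SENSITIVE_ACTIONS and not approve(action):
        return "blocked"
    return "executed"  # a real assistant would perform the action here


# Usage: a callback that always declines stands in for a user saying "no".
RESULT = execute_with_oversight(
    ProposedAction("purchase", "Buy 3 gift cards"),
    approve=lambda action: False,
)
```

In a real assistant the `approve` callback would prompt the user; the point of the design is that a reply or purchase triggered by an incoming message cannot go out unseen.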

“By exploring innovative ways in which GenAI can be integrated into cyber-physical systems (CPS), I aim to identify novel approaches that not only fortify security measures but also optimize the overall performance of CPS in collaboration with human interactions,” said Cohen.