Cells in Motion — Now in Super Resolution

Microscopes have long been invaluable tools for biologists, but getting a clear look at the smallest sub-cellular structures has always been tricky. In recent years, scientists developed a method to obtain a single super-resolved image of a sub-cellular structure by capturing a video instead of a single shot. This comes at the price of a longer acquisition time and requires cells to be held artificially still. Now, using AI, Technion scientists have found a workaround that overcomes these obstacles.

This new technology constitutes a major leap in biologists’ ability to study living cells, allowing researchers to observe and better understand the inner machinery of cells and the organelles within them.

To find what they’re looking for under a microscope, biologists commonly use fluorescent dyes to stain specific structures of interest, making them easier to see. However, resolving details smaller than 200 nanometers, or approximately half the wavelength of visible light, is impossible using standard optical microscopes.

In 2014, scientists from the U.S. and Germany won the Nobel Prize in Chemistry for developing “single-molecule localization microscopy” (SMLM), which relies on making a video of the fluorescently labeled sample rather than a single image. In each frame, only a few individual molecules emit light, creating a sparse pattern of specks. Each speck is localized with high precision, and the localizations from the entire video are stacked together to form a high-resolution image.
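For readers who want to see the localize-and-stack idea concretely, here is a minimal toy sketch in Python. It is an illustration under stated assumptions, not the method used in practice or by the Technion team: real SMLM software typically fits a model of the microscope's blur to each speck, whereas this sketch substitutes a simple intensity-weighted centroid. The frame size, blur width, threshold, and all function names (`render_frame`, `localize`, `reconstruct`) are hypothetical choices made for the example.

```python
import math
from collections import Counter

SIZE = 16      # camera frame is SIZE x SIZE pixels (illustrative value)
SIGMA = 1.0    # blur of a single emitter, in camera pixels (illustrative value)
UPSCALE = 4    # the output grid is UPSCALE times finer than the camera grid


def render_frame(emitters):
    """Blur each active emitter (x, y) into a Gaussian spot on the camera grid."""
    frame = [[0.0] * SIZE for _ in range(SIZE)]
    for ex, ey in emitters:
        for y in range(SIZE):
            for x in range(SIZE):
                d2 = (x - ex) ** 2 + (y - ey) ** 2
                frame[y][x] += math.exp(-d2 / (2 * SIGMA ** 2))
    return frame


def localize(frame, threshold=0.5, radius=3):
    """Return one sub-pixel (x, y) estimate per bright local maximum.

    Assumption: an intensity-weighted centroid stands in for the model
    fitting that real localization software would perform.
    """
    spots = []
    for y in range(radius, SIZE - radius):
        for x in range(radius, SIZE - radius):
            peak = frame[y][x]
            if peak < threshold:
                continue
            window = [(xx, yy, frame[yy][xx])
                      for yy in range(y - radius, y + radius + 1)
                      for xx in range(x - radius, x + radius + 1)]
            if any(w > peak for _, _, w in window):
                continue  # a brighter neighbor exists, so this is not a spot center
            total = sum(w for _, _, w in window)
            spots.append((sum(xx * w for xx, _, w in window) / total,
                          sum(yy * w for _, yy, w in window) / total))
    return spots


def reconstruct(frames):
    """Stack the localizations from every frame into counts on the finer grid."""
    localizations = [spot for frame in frames for spot in localize(frame)]
    image = Counter((int(x * UPSCALE), int(y * UPSCALE)) for x, y in localizations)
    return localizations, image


# Two emitters half a camera pixel apart, blinking one at a time over six frames.
truth = [(7.8, 8.4), (8.3, 8.4)]
frames = [render_frame([truth[i % 2]]) for i in range(6)]
localizations, image = reconstruct(frames)
print(sorted(image.items()))
```

In any single frame the two emitters would blur into the same few camera pixels, but because they light up at different times, each can be pinned down separately and placed in its own cell of the finer grid. The cost is the one the article goes on to describe: many frames are needed to build up one image.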

But generating a single high-resolution image requires exposures of more than a minute, so the cell must be fixed, much like early photography, in which subjects had to stand still to produce a sharp picture. Scientists, however, need to see cells in their natural state, moving and responding to stimuli.

“Things move in a living cell, but they move with a certain regularity,” said lead researcher Professor Yoav Shechtman. “If we look at microtubules, for example, they are sort of like threads, bound together into a mesh. They move, but you don’t have bits of them randomly hopping about. There’s a pattern to the movement.”

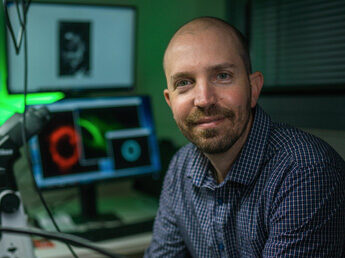

So, Prof. Shechtman and his team in the Faculty of Biomedical Engineering, led by Ph.D. student Alon Saguy, trained artificial neural networks to find patterns in the SMLM videos. The AI networks would receive the recording frame by frame, each frame showing only a few spots of light, and produce a continuous video of the structures behind those spots. Their methodology, recently published in Nature Methods, allowed the group to visualize multiple small cell structures in their natural movement at a resolution of 30 nanometers, orders of magnitude faster than the original SMLM method.